Recent News: DNAMIC announced Number 1 of the Top Costa Rica Custom Software Developers by Clutch.co – Learn More Details

$7.42 billion

The global healthcare ERP market, which includes SAP solutions, was estimated at USD 7.42 billion in 2023 and is expected to grow at a CAGR of 7.2% from 2024 to 2030.

For years, enterprises in healthcare and life sciences have tried to tap into AI’s full potential, but one major hurdle has always stood in the way: SAP data integration. Bringing SAP data into modern platforms like Databricks was never simple. It required complicated workarounds, slow processes, and a lot of frustration.

That challenge is finally fading: with the new native SAP Databricks integration, those obstacles are starting to disappear.

In this post, we look at how companies used to connect SAP data to Databricks, what the new native integration brings to enterprises running SAP, and how it opens the door to smarter AI agents and automated workflows.

Before this native integration, enterprises faced significant hurdles when trying to connect SAP data with Databricks:

The result? Slow insights, fragmented data ecosystems, and limited AI innovation.

With the new native integration between SAP and Databricks, sharing data is far easier than before. Many of the roadblocks that made AI adoption difficult are removed, allowing businesses to work with their data more seamlessly.

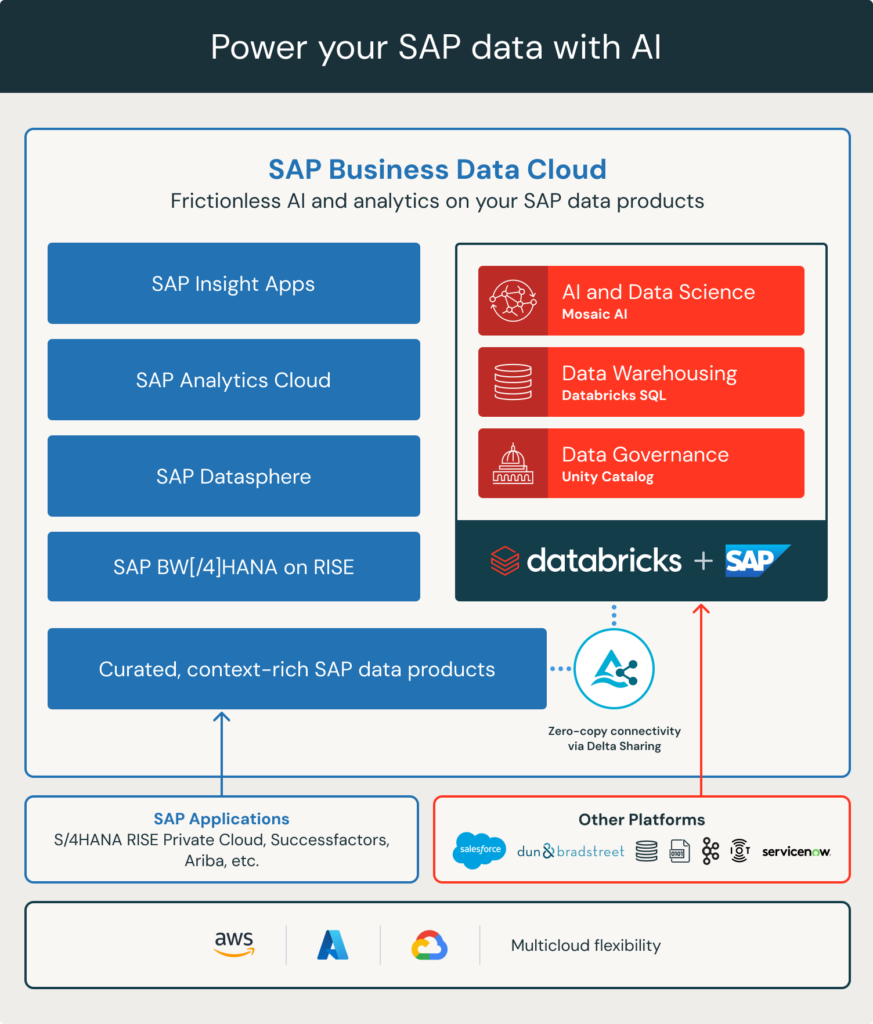

Image Source: Introducing SAP Databricks

This transformation is powered by several key features that make integration smoother and more efficient:

Unity Catalog keeps data secure and well governed across both platforms. Companies can enforce consistent access rules in one place, which makes regulatory compliance much easier.

The Business Data Fabric Architecture connects SAP data across ERP, CRM, and other systems. This allows different teams to analyze information from a single, unified source.

The result? Data flows faster, insights become richer, and compliance stays intact.

The SAP Databricks integration is set to transform how businesses use their data to make better decisions. No more frustrating workarounds, long delays, or struggling to make disconnected systems work together. Data becomes easier to access, simpler to manage, and ready for AI-powered insights that drive real results.

This integration is not just about making data easier to share. It is also about enabling smarter automation, with AI agents that perform real-time analytics on life sciences data from SAP and Databricks.

Here are some key ways businesses are putting AI agents to work:

The SAP Databricks native integration removes many technical barriers, but unlocking its full potential requires more than just connecting the systems. Enterprises need a modern data architecture that supports real-time data sharing and advanced AI workloads.

This is where a data warehouse or data lake becomes essential. Without a unified platform, even the most advanced integrations will struggle to deliver AI-powered insights.

A modern Lakehouse architecture with Databricks gives businesses the foundation they need by:

Providing a single source of truth, ensuring that teams across the organization work with accurate, up-to-date data.

At DNAMIC, we specialize in:

Our deep expertise in healthcare and life sciences means we understand your challenges and how to solve them with the power of AI.

The new SAP Databricks native integration has the potential to transform enterprises, but only if they are prepared to take full advantage of it. Real-time insights, AI-driven automation, and seamless workflows are now possible, but they all rely on having a solid data foundation.

For organizations that want to use AI to improve patient outcomes, speed up research, and streamline operations, the first step is clear. Investing in the right data infrastructure is what will make these innovations a reality.

Connect with DNAMIC to explore how we can help you unlock the full potential of your data and drive meaningful advancements in your industry.

Healthcare

Bioinformatics

Genomics

Pharmaceuticals

Biotechnology

Medical Devices

Health Informatics

HealthTech

DNAMIC | Databricks Data Solutions is a premier data services and technology company dedicated to transforming the healthcare and life sciences industries through data-driven AI solutions.

San Diego, California, United States
